Computing has come a long way since the days of the abacus. The history of computing is a fascinating story of innovation, invention, and evolution. From the humble beginnings of the abacus to the sophisticated artificial intelligence systems of today, computing has transformed the way we live and work.

The evolution of technology has been a key driver of the history of computing. As the underlying technology has advanced, so too has the computing industry, from early mechanical calculating devices to modern breakthroughs such as quantum computing. For example, the steam engine of the 18th century powered the machines and factories of the Industrial Revolution, and the precision engineering that grew up around them helped make the mechanical calculators and computer designs of the 19th century feasible.

One of the earliest milestones in computing history was the invention of the abacus, a simple device that in its familiar form consists of beads that slide along rods. Early counting boards of this kind are thought to date back more than 4,000 years to ancient Mesopotamia, with bead-and-rod versions such as the Chinese suanpan appearing later. The abacus was used to perform basic calculations.

In the 1830s, Charles Babbage, often considered the father of computing, designed the Analytical Engine, generally regarded as the first design for a general-purpose mechanical computer. It was a programmable machine that used punch cards to input data and instructions. Although the machine was never built during Babbage’s lifetime, his ideas laid the foundation for modern computing by showing how a single machine could be programmed to carry out different calculations.

The 20th century saw the development of electronic computers, which used vacuum tubes and later transistors to process information. ENIAC, often cited as the first general-purpose electronic computer, was completed in 1945, publicly unveiled in 1946, and used to perform complex calculations for the US military.

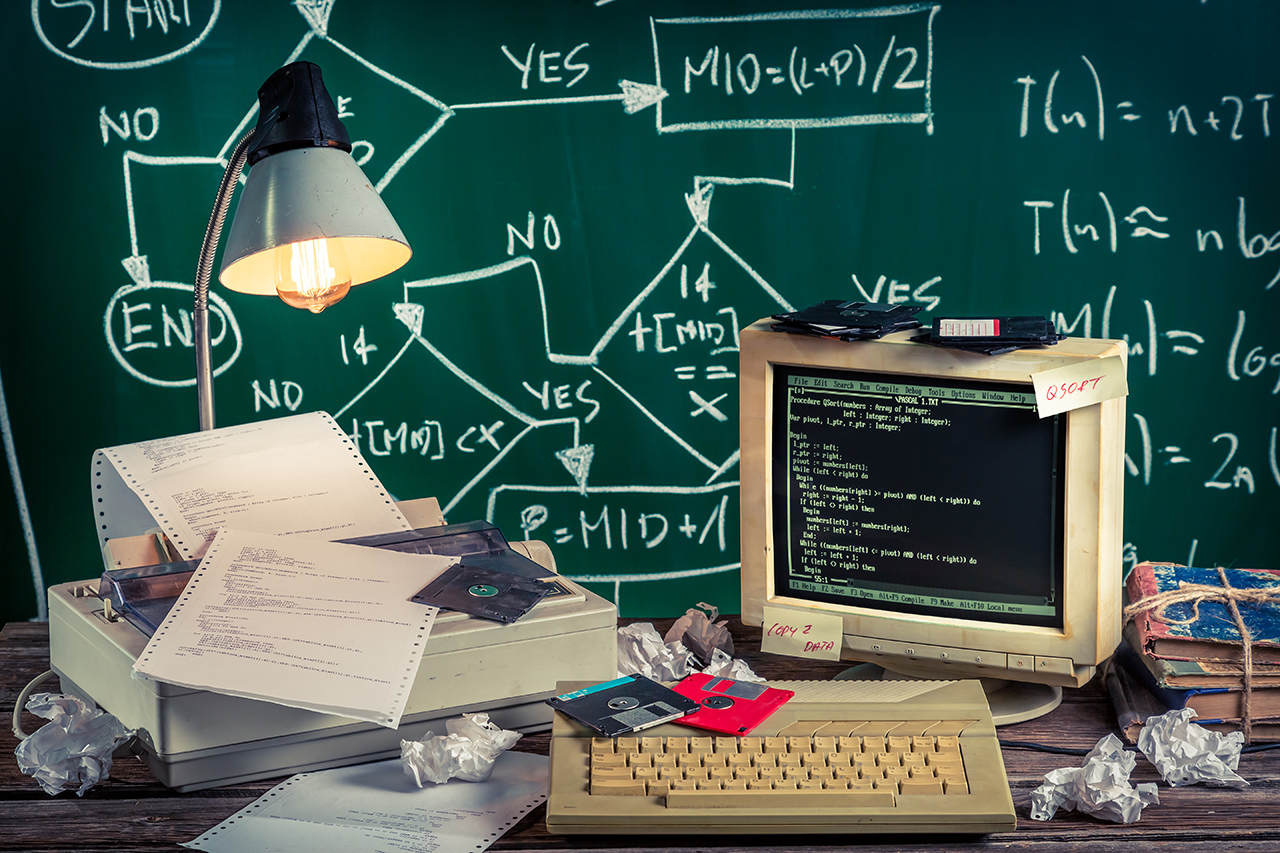

In the 1970s and 1980s, the invention of the microprocessor and the personal computer revolutionized computing. The microprocessor, first introduced commercially in the early 1970s, made it possible to build computers that were smaller, faster, and more affordable. The personal computer revolution of the 1980s and 1990s then brought computing power to the masses, and the introduction of the graphical user interface (GUI) made it easier for non-technical users to interact with computers. The internet and mobile computing have since transformed the way we communicate, work, and live our lives.

In the 21st century, a new era of computing has emerged. Artificial intelligence (AI) and machine learning (ML) technologies have enabled computers to perform tasks that were once thought to be the exclusive domain of humans. These technologies are being used to develop self-driving cars, personalized medicine, and intelligent virtual assistants, and to analyze large datasets and make predictions and decisions in real time.

The history of computing is also marked by the contributions of many computer pioneers, the individuals who had the vision, drive, and technical expertise to develop new technologies and push the boundaries of what was possible. Notable pioneers include Ada Lovelace, who is credited with writing the first algorithm designed to be processed by a machine; Alan Turing, who played a key role in cracking the German Enigma code during World War II and is widely considered the father of computer science; and Steve Jobs, who co-founded Apple and was instrumental in bringing personal computing to the masses.

Computing has changed dramatically over time. The earliest computers were large, cumbersome, and accessible to only a few people. Today, we carry more computing power in our pockets than the first electronic computers ever had. Let’s take a closer look at some of the key changes that have taken place.

Size and Accessibility

One of the most obvious changes in the history of computing is the size and accessibility of computing devices. The earliest electronic computers were massive machines that filled entire rooms, and they were so expensive that only governments and large corporations could afford them. Today, laptops and smartphones put far more capable machines within reach of ordinary users.

Processing Power

Another major change in the history of computing is processing power. Early computers were largely limited to arithmetic calculations, but modern computers are capable of performing complex calculations, running multiple applications simultaneously, and processing massive amounts of data in real time.

User Interfaces

User interfaces have also changed dramatically in the history of computing. Early computers had no graphical user interface (GUI), and users interacted with the machine using punch cards or text-based interfaces. The GUI, pioneered in the 1970s and popularized by personal computers in the 1980s and 1990s, revolutionized the way we interact with computers, making it easier for non-technical users to access and use computing technology.

Connectivity

Connectivity has also been a major driver of change in the history of computing. The development of the internet and mobile computing has transformed the way we communicate, work, and live our lives. We are now able to access vast amounts of information, communicate with people all over the world, and work remotely from almost anywhere. The internet has also enabled new technologies such as cloud computing and has helped accelerate advances in artificial intelligence, both of which are changing the way we use and interact with computers.

In conclusion, the history of computing is a story of innovation, invention, and evolution. The evolution of technology has been a key driver in the development of computing, and computer pioneers have played an essential role in pushing the boundaries of what is possible. As we look to the future, it’s clear that computing will continue to shape the world we live in, and we can only imagine what breakthroughs will come next.